And the winner is…

In the previous post I announced that one person will win a copy of the Brazilian Portuguese translation of JavaScript Patterns. So here's the winner:

He wins a signed copy of "Padrões JavaScript". Waiting for your mailing address, Lou 🙂

Update: Lou doesn't speak Portuguese, so he gets an English copy. The second winner is:

Update: Monty is not too proud of his Portuguese skills either, so third is

Asen is an awesome JavaScripter, who actually reviewed the book (thanks a million!) and he already has a copy. Next.

How I picked the winner

By writing some JavaScript in the console, of course.

The winner was to be randomly picked from everyone who retweeted my tweet or posted a comment on the announcement. So I had to collect those.

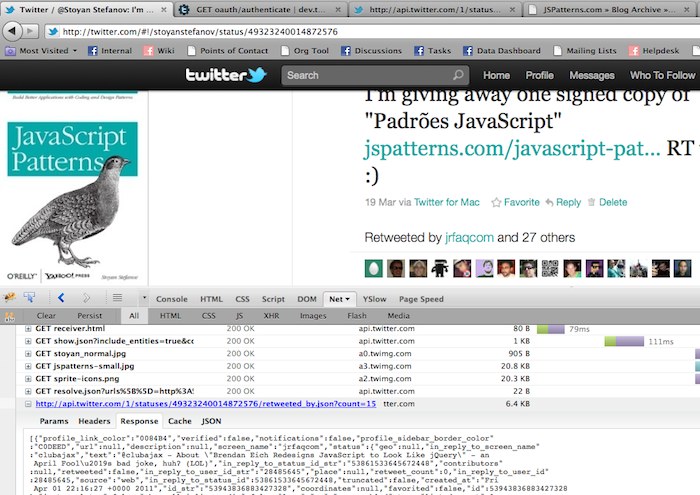

The tweet page says there have been 27 retweets (worded a bit like 28, but it looks like it's 27). The page only shows about 15 people though, and I need all of them. Given how Twitter doesn't let you search older stuff, I was afraid it was too late. I had to check the API first. I was expecting I could hit a few URLs and get the data I need. Tough luck. All these auth keys, tokens, secrets and stuff got me floored.

Luckily Twitter's UI is also using the APIs. Checking the network traffic I was able to spot the request I need!

The URL is:

http://api.twitter.com/1/statuses/49323240014872576/retweeted_by.json?count=15

I only needed to change the count to something over 27, so I made it 30. Lo and behold I got the data!
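The only thing that differs between the two requests is the count parameter. As a rough sketch, the URL could be put together like this. The helper name retweetedByUrl is made up for illustration, and the endpoint is simply the one spotted in the network traffic, so it may not be available anymore.

```javascript
// Hypothetical helper (not part of any Twitter library): builds the
// retweeted_by URL seen in the network traffic for a tweet id and a count.
// The id is passed as a string because it is too large to be represented
// exactly as a JavaScript number.
function retweetedByUrl(tweetId, count) {
  return 'http://api.twitter.com/1/statuses/' + tweetId +
    '/retweeted_by.json?count=' + encodeURIComponent(count);
}

var url = retweetedByUrl('49323240014872576', 30);
console.log(url);
```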

The rest of the stuff I did in Safari's Web Inspector console.

Visiting the URL:

http://api.twitter.com/1/statuses/49323240014872576/retweeted_by.json?count=30

We get a JSON array as the document.

>>> var a = document.body.innerHTML

>>> a

"<pre style="word-wrap: break-word; white-space: pre-wrap;">[{"profile_link_color":"0084B4","verified":false,"not....]</pre>"

Safari wraps everything in a PRE behind the scenes, so this is how we get the data:

>>> var source = $$('pre')[0].innerHTML;

>>> source

"[{"profile_link_color":"0084B4","verified":...]"

eval() it:

>>> source = eval(source)

[Object, Object...]

>>> source.length

27
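Since the response is plain JSON, JSON.parse should do the same job as eval() here without running whatever happens to be in the string. A minimal sketch, using a made-up two-entry sample in place of the real response:

```javascript
// Made-up sample standing in for the text pulled out of the PRE element.
var sampleSource = '[{"screen_name":"alice","verified":false},' +
                   '{"screen_name":"bob","verified":false}]';

// JSON.parse only accepts valid JSON and never executes code, unlike eval().
var parsed = JSON.parse(sampleSource);

console.log(parsed.length);          // 2
console.log(parsed[0].screen_name);  // "alice"
```

One caveat: innerHTML returns characters such as & and < in their escaped form, so if the payload contained any of those, reading textContent instead would be the safer way to get the raw JSON text.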

Sounds right. Now let's move all usernames into a new array using the new ECMAScript 5 forEach fancy-ness:

>>> var all = [];

>>> source.forEach(function(e){all.push(e.screen_name)})

>>> all.length

27

>>> all

["jrfaqcom", "gustavobarbosa", "gabrielsilva", ...."vishalkrsingh"]
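ECMAScript 5 also has map, which builds the new array in one go instead of pushing into it. A small sketch with made-up sample objects (only the screen_name property mirrors the real data):

```javascript
// Made-up sample objects; only screen_name mirrors the real response.
var sampleUsers = [
  {screen_name: 'alice'},
  {screen_name: 'bob'},
  {screen_name: 'carol'}
];

// map returns a new array holding the callback's return value for each item.
var names = sampleUsers.map(function (user) {
  return user.screen_name;
});

console.log(names);
```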

Blog comments

I had 4 comments on the original post. WordPress puts all comments in a div with class commentlist, so this allows us to grab all comments:

>>> var comments = $$('.commentlist cite a')

>>> comments.length

4

Now let's grab only the names; they are in each link's innerHTML:

>>> var all = [];

>>> comments[0].innerHTML

"Fabiano Nunes"

>>> comments.forEach(function(e){all.push(e.innerHTML)})

TypeError: Result of expression 'comments.forEach' [undefined] is not a function.

Eh? What? Oh, the list of links is not an array but a NodeList:

>>> comments

[ <a href="http://fabiano.nunes.me" rel="external nofollow" class="url">Fabiano Nunes</a> , <a href="http://www.gabrielizaias.com" rel="external nofollow" class="url">Gabriel Izaias</a> , <a href="http://www.jrfaq.com.br" rel="external nofollow" class="url">João Rodrigues</a> , <a href="http://www.jrfaq.com.br" rel="external nofollow" class="url">João Rodrigues</a> ]

>>> comments.forEach

undefined

So, list of nodes converted to array:

>>> comments = Array.prototype.slice.call(comments)

Now forEach is usable:

>>> comments.forEach

function forEach() { [native code] }

>>> comments.forEach(function(e){all.push(e.innerHTML)})
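The slice trick works on anything array-like, meaning an object with numeric keys and a length. Here is a sketch that can run outside the browser, with a plain object standing in for the NodeList (fakeNodeList and the names in it are made up):

```javascript
// Array-like stand-in for a NodeList: indexed entries plus a length,
// but none of the array methods.
var fakeNodeList = {
  0: {innerHTML: 'First Commenter'},
  1: {innerHTML: 'Second Commenter'},
  length: 2
};

console.log(typeof fakeNodeList.forEach); // "undefined"

// slice, called with the array-like as `this`, copies the indexed entries
// into a real array.
var asArray = Array.prototype.slice.call(fakeNodeList);
console.log(Array.isArray(asArray)); // true

var commenterNames = [];
asArray.forEach(function (e) {
  commenterNames.push(e.innerHTML);
});
console.log(commenterNames);
```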

So we have a list of all names. Let's serialize it, so it can be pasted to the other window where we had the Twitter data.

>>> all

["Fabiano Nunes", "Gabriel Izaias", "João Rodrigues", "João Rodrigues"]

>>> JSON.stringify(all)

"["Fabiano Nunes","Gabriel Izaias","João Rodrigues","João Rodrigues"]"

(A simple array join followed by a string split would do too in this simple example.)
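For comparison, here are both round trips side by side, as a sketch with made-up names. The '|' delimiter is an assumption: join/split only survives the trip if the delimiter never appears inside a name, which is why JSON is the more robust choice in general.

```javascript
// Made-up sample names, including a duplicate like in the real list.
var sampleNames = ['Ann Example', 'Bob Example', 'Ann Example'];

// JSON round trip: works for any strings, whatever characters they contain.
var serialized = JSON.stringify(sampleNames);
var viaJson = JSON.parse(serialized);

// join/split round trip: '|' is an assumed delimiter that must not occur
// inside any of the names.
var joined = sampleNames.join('|');
var viaSplit = joined.split('|');

console.log(viaJson);
console.log(viaSplit);
```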

All together

Back to the Twitter window. Deserializing the comments array:

>>> comments = JSON.parse('["Fabiano Nunes","Gabriel Izaias","João Rodrigues","João Rodrigues"]')

["Fabiano Nunes", "Gabriel Izaias", "João Rodrigues", "João Rodrigues"]

Merging the two arrays:

>>> all = all.concat(comments);

["jrfaqcom", "gustavobarbosa", ...."João Rodrigues"]

>>> all.length

31

Perfect. 31 entries. Just as many as the days in March when I announced it. So let's take the 19th array element to be the winner.

But shuffle the array a bit first.

Shuffle

Sorting the array by randomness. (I shuffled and reshuffled it three times, just because.)

>>> all.sort(function() {return 0 - (Math.round(Math.random()))})

["ravidsrk", "anagami", "lpetrov", ...]
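A side note on the technique: sorting with a random comparator is quick to type, but it is not guaranteed to produce every ordering with equal probability, and this comparator only ever returns 0 or -1. The usual unbiased alternative is a Fisher-Yates shuffle. The sketch below is not what was used for the draw; the shuffle function name and the sample entries are made up for illustration.

```javascript
// Fisher-Yates shuffle: returns a shuffled copy and leaves the input untouched.
function shuffle(list) {
  var result = list.slice();
  // Walk from the end, swapping each position with a random one at or before it.
  for (var i = result.length - 1; i > 0; i--) {
    var j = Math.floor(Math.random() * (i + 1));
    var tmp = result[i];
    result[i] = result[j];
    result[j] = tmp;
  }
  return result;
}

// Made-up entries standing in for the list of participants.
var entries = ['a', 'b', 'c', 'd', 'e'];
var shuffled = shuffle(entries);
console.log(shuffled);
```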

And the winner is:

>>> all[18]

"LouMintzer"